Laravel in Kubernetes Part 2 - Dockerizing Laravel

In this part of the series, we are going to Dockerize our Laravel application, with separate layers for each of its technical pieces (FPM, web server, queues, cron, etc.).

We will do this by building a layer for each process, copying in the codebase, and building separate containers from them.


Prerequisites

- A Laravel application. You can see Part 1 if you haven't got an application yet

- Docker running locally

Getting started

Laravel 8 ships with Sail, which already runs Laravel applications in Docker. However, it is not entirely production-ready, and you may need to adapt it to your use case, for example for sizing or custom configuration. It also only provides a single PHP container, whereas production needs a few more.

We need an FPM container to process requests, a PHP CLI container to handle Artisan commands and, for example, run queue workers, and an Nginx container to serve static content.

As you can already see, simply running one container would not serve our needs, and doesn't allow us to scale or manage different pieces of our application differently from the others.

In this post we'll cover all of the required containers, and what each of them is specialised for.

Why wouldn't we use the default Sail container?

The default Sail container contains everything we need to run the application, to the point where it has too much for a production deployment.

For local development it works well out of the box, but for a production deployment on Kubernetes it's a bit big, and has too many components installed in a single container.

The more "stuff" installed in a container, the larger the attack surface and the more there is for us to manage. For our Kubernetes deployment we are going to split out the different parts (FPM, Nginx, queue workers, crons, etc.).

Kubernetes filesystem

One thing we need to look into first is the Kubernetes filesystem.

By default, Laravel writes things like logs and sessions to files on the local disk.

When moving toward Kubernetes, we start playing in the field of distributed applications, and a local filesystem no longer suffices.

Take sessions, for example. If we have 2 Kubernetes pods each storing sessions on their own filesystem, every request from the same user would need to reach the same pod, otherwise the session might not exist.

With that in mind, we need to make a couple of updates to our application in preparation for Dockerizing it.

We will also eventually secure our application with a read-only filesystem, so that no part of it can depend on state written to local disk.

Logging Update

One thing we need to do before we start setting up our Docker containers is to update the logging driver to output to stdout instead of to a file.

Being able to run kubectl logs and see application logs is the primary reason for logging to stdout. If we log to a file, we would need to get into the container and cat the log files, which makes it a lot more difficult.

So let's update the logging to point at stdout.

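In the application configuration file config/logging.php, add a new stdout log channel to the existing channels array. The default file already imports Monolog\Handler\StreamHandler, which this channel uses.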

return [

'channels' => [

'stdout' => [

'driver' => 'monolog',

'level' => env('LOG_LEVEL', 'debug'),

'handler' => StreamHandler::class,

'formatter' => env('LOG_STDOUT_FORMATTER'),

'with' => [

'stream' => 'php://stdout',

],

],

],

];

Next, update your .env file to use this logger:

LOG_CHANNEL=stdout

The application will now write any logs to stdout, so we can read them directly.
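If you script your local setup instead of editing .env by hand, a small helper can set a key idempotently. The sketch below is a hypothetical helper (set_env_value is not part of Laravel or Sail). It drops any existing line for the key and appends the new value, so running it twice still leaves a single entry.

```shell
# Hypothetical helper: set KEY=VALUE in an env file (default: .env).
# Any existing line for KEY is removed and the new value is appended,
# so calling it repeatedly never leaves duplicate keys behind.
set_env_value() {
  key="$1"
  value="$2"
  file="${3:-.env}"

  tmp=$(mktemp) || return 1

  # Copy every line except an existing assignment for this key.
  # grep exits 1 when it copies nothing, which is fine here.
  if [ -f "$file" ]; then
    grep -v "^${key}=" "$file" > "$tmp" || true
  fi

  printf '%s=%s\n' "$key" "$value" >> "$tmp"

  # Write back through the original file so its permissions are kept.
  cat "$tmp" > "$file"
  rm -f "$tmp"
}
```

For this step you would run set_env_value LOG_CHANNEL stdout from the project root. The same call works for the SESSION_DRIVER change in the next section.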

Session update

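Sessions also use the local filesystem by default. We want to switch them to Redis instead, so all pods can reach the same session store, along with our cache.

In order to do this for sessions, we'll install the predis/predis package. Laravel 8 defaults to the phpredis client, so also set REDIS_CLIENT=predis in .env if you want Laravel to use predis. Alternatively, stay on phpredis, which the redis PECL extension installed later in this post provides.

We can install it with Composer locally, or add it to composer.json by hand. Either way, composer.lock must be updated too, because the Docker build runs composer install from the lock file.

$ composer require predis/predis

Or, if you prefer, add it to the require list in composer.json and then run composer update predis/predis: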

{

"require": {

[...]

"predis/predis": "^1.1"

}

}

Also, update the .env to use Redis for sessions:

SESSION_DRIVER=redis

HTTPS for production

Because we are going to expose our application and add Let's Encrypt certificates, we also need to force HTTPS for production.

When a request actually reaches our application, it will be an HTTP request, as TLS terminates at the Ingress.

We therefore need to force HTTPS URLs for our application.

For example, when our application serves HTML pages, it generates URLs to CSS files using http if the request came in over http. Forcing https makes all the URLs in our HTML use https.

In the app/Providers/AppServiceProvider.php file, in the boot method, force https for production.

<?php

namespace App\Providers;

# Add the Facade

use Illuminate\Support\Facades\URL;

use Illuminate\Support\ServiceProvider;

class AppServiceProvider extends ServiceProvider

{

/** All the rest */

public function boot()

{

if($this->app->environment('production')) {

URL::forceScheme('https');

}

}

}

This forces any URLs Laravel generates in production, including asset URLs, to use https, which our domain will serve.
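To check the effect, you can look for any http:// URLs left in a rendered page. Below is a minimal sketch. It assumes the page HTML arrives on stdin and that URLs appear inside quoted attributes, and find_insecure_urls is a hypothetical helper. The scheme is only forced when APP_ENV is production, so a local environment will still show http:// URLs.

```shell
# Hypothetical helper: print every http:// URL found in HTML on stdin,
# one per line. Prints nothing (and still succeeds) when all URLs use https.
find_insecure_urls() {
  # -o prints only the matching part; -E enables the + quantifier.
  # A URL is taken to end at a double quote, single quote, space, or '>'.
  grep -oE "http://[^\"' >]+" || true
}
```

For example, curl -s https://your-domain.example | find_insecure_urls (the domain is a placeholder) should print nothing once production forces https. Links to external http:// sites will also show up, so review the output rather than treating every line as a bug.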

Docker Containers

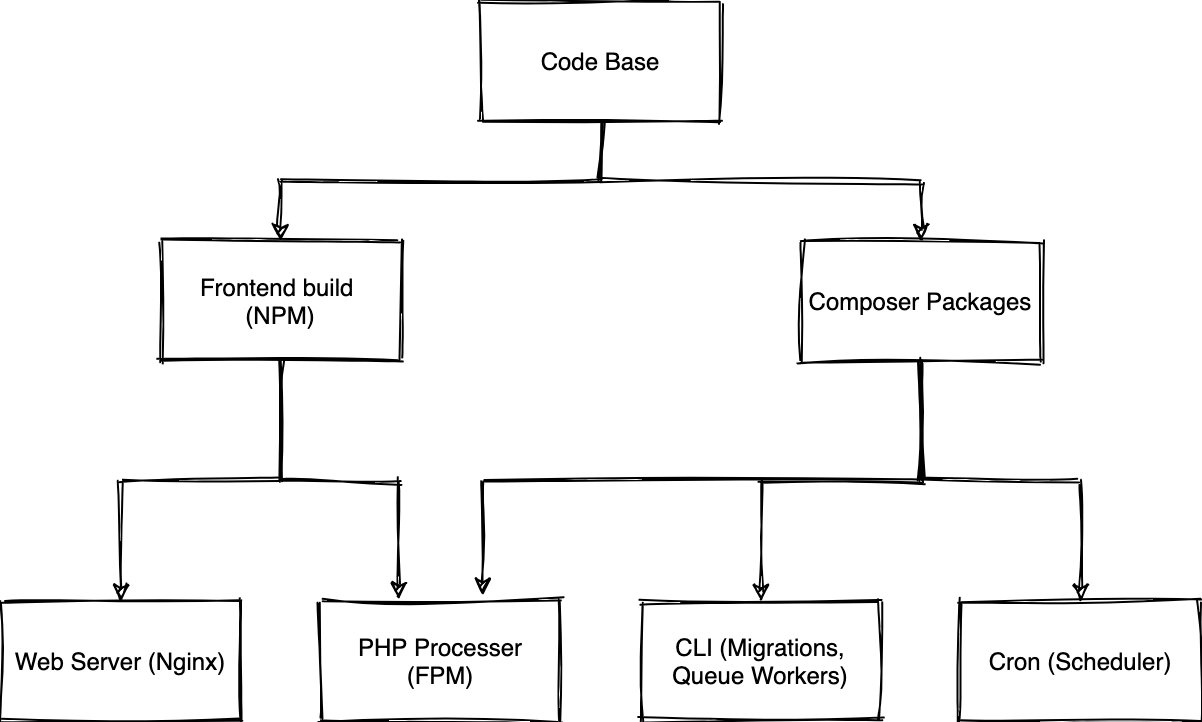

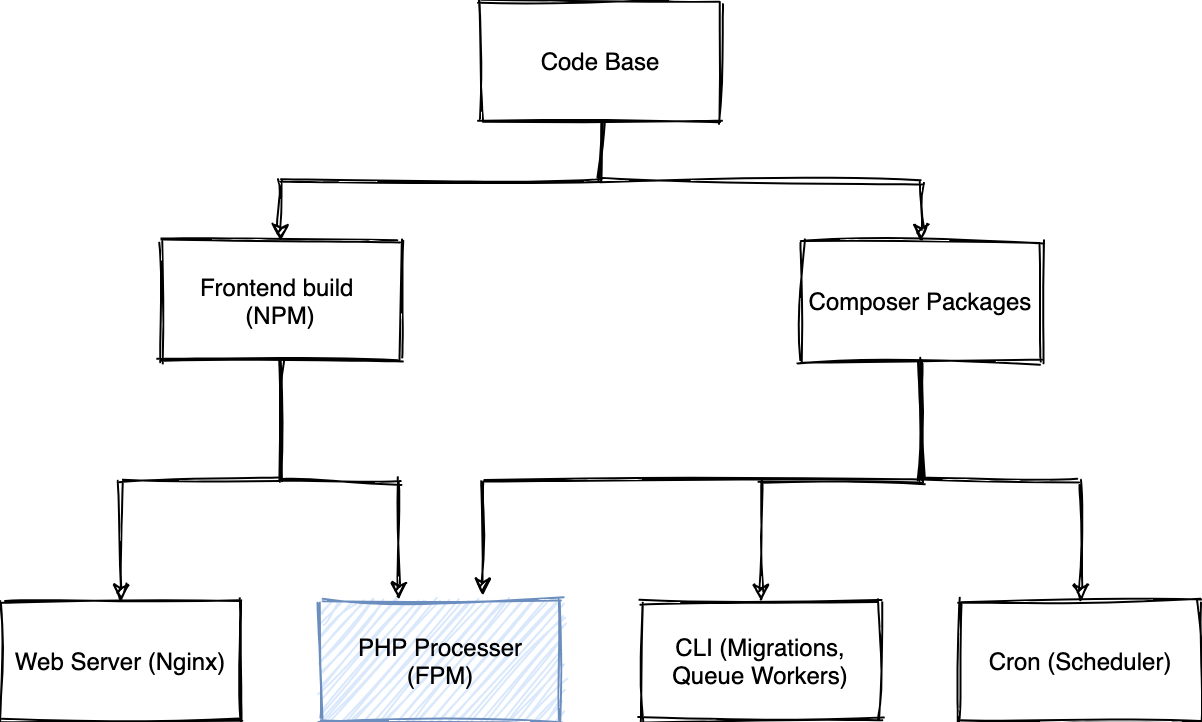

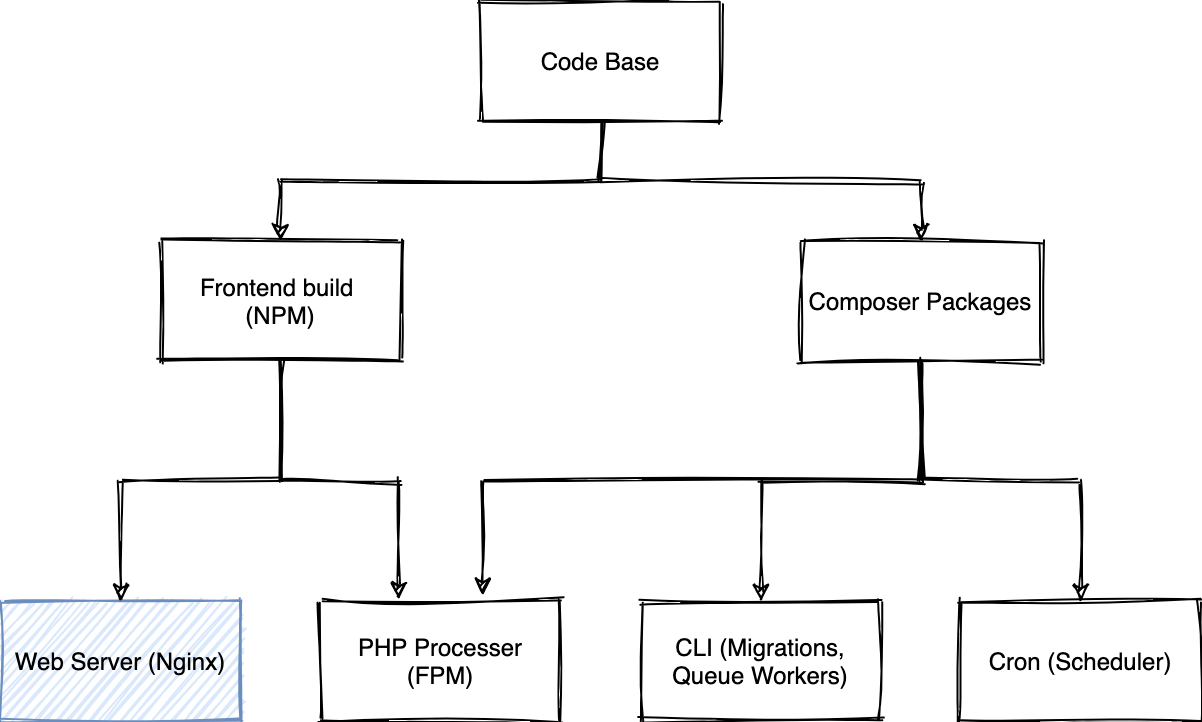

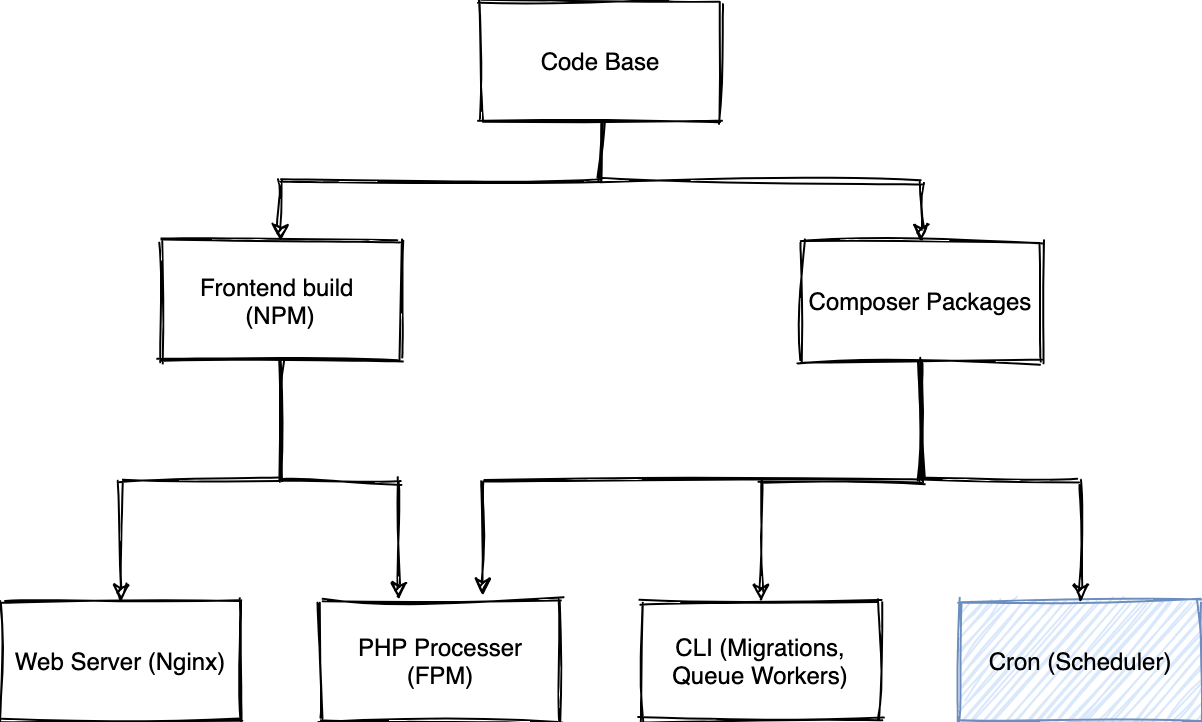

We want to create multiple containers for our application, built from the same base stages but each specialised for a specific job.

Our container structure looks a bit like the below diagram.

We will use Docker multi-stage builds to create each of the different pieces of the diagram.

We will start with the two base stages (Composer and NPM), and then build out each of the specialised stages.

The .dockerignore file

We will start by adding a .dockerignore file so we can prevent Docker from copying in the node_modules and the vendor directory, as we want to build any binaries for the specific architecture in the image.

In the root of your project, create a file called .dockerignore with the following contents

/vendor

/node_modules

The Dockerfile

We need to create a Dockerfile in the root of our project, and set up some reusable pieces.

In the root of your project, create a file called Dockerfile.

$ touch Dockerfile

Next, declare two build arguments (ARG) at the top of the Dockerfile to hold the PHP extensions we require.

One holds built-in extensions, and the other holds extensions we need to install using PECL.

# Create args for PHP extensions and PECL packages we need to install.

# This makes it easier if we want to install packages,

# as we have to install them in multiple places.

# This helps keep our Dockerfiles DRY -> https://bit.ly/dry-code

# You can see a list of required extensions for Laravel here: https://laravel.com/docs/8.x/deployment#server-requirements

ARG PHP_EXTS="bcmath ctype fileinfo mbstring pdo pdo_mysql tokenizer dom pcntl"

ARG PHP_PECL_EXTS="redis"

If your application needs additional extensions installed, feel free to add them to the list before building.
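Because these lists are build arguments declared before the first FROM, you can also override them at build time with docker build --build-arg instead of editing the Dockerfile. Each stage that re-declares ARG PHP_EXTS picks up the overridden value. --build-arg replaces the whole value, so the defaults have to be repeated. The helper below is a hypothetical sketch. Extensions that depend on extra system libraries also need those packages added to the apk add lines in each stage.

```shell
# Default extension list, copied from the PHP_EXTS ARG in the Dockerfile.
DEFAULT_PHP_EXTS="bcmath ctype fileinfo mbstring pdo pdo_mysql tokenizer dom pcntl"

# Hypothetical helper: build one stage with extra built-in PHP extensions.
# Usage: build_with_extra_exts <stage> <extension>...
build_with_extra_exts() {
  stage="$1"
  shift
  # --build-arg overrides the global ARG default for every stage that
  # re-declares ARG PHP_EXTS, so the defaults are repeated here.
  docker build . \
    --target "$stage" \
    --build-arg PHP_EXTS="$DEFAULT_PHP_EXTS $*"
}
```

For example, build_with_extra_exts fpm_server exif builds the FPM stage with exif added, assuming that extension needs no extra system libraries.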

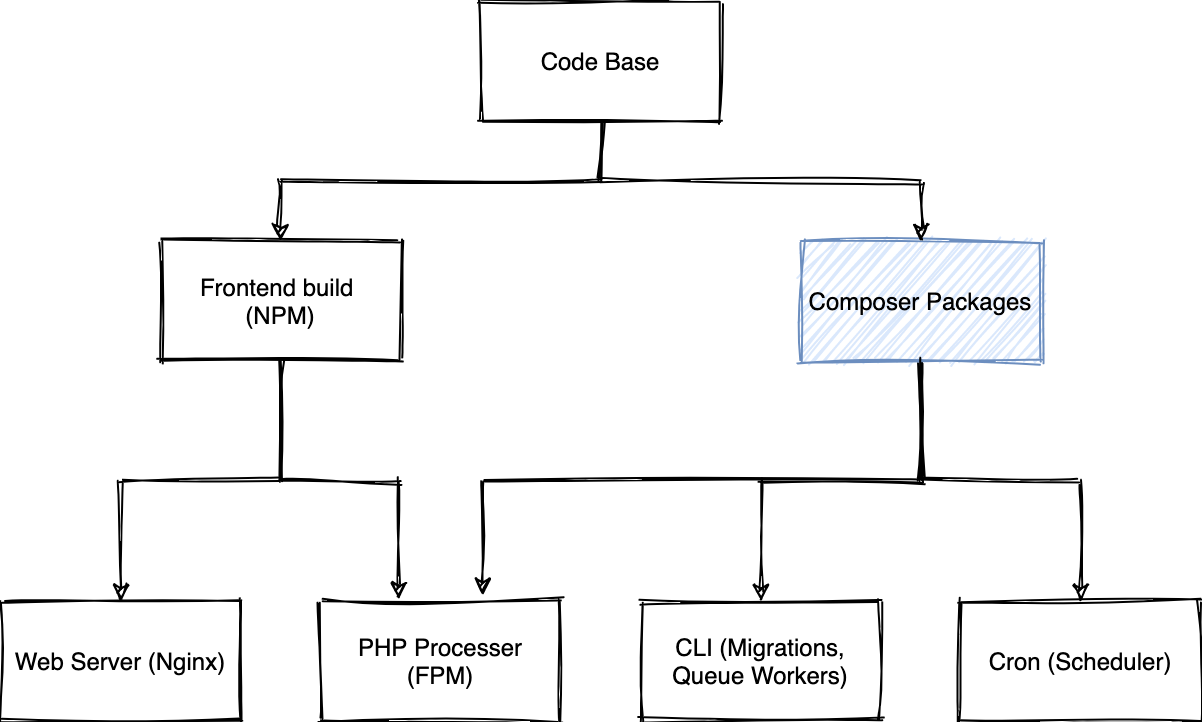

Composer Stage

We need to build a Composer base, which contains all our code, and installed Composer dependencies.

This will set us up for all the following stages to reuse the Composer packages.

Once we have built the Composer base, we can build the other layers from it, using only the specific parts we need.

We start with the official Composer image, which is based on PHP 8 and Alpine Linux.

This will help us install dependencies of our application.

In our Dockerfile, we can add the Composer stage (This goes directly after the previous piece)

# We need to build the Composer base to reuse packages we've installed

FROM composer:2.1 as composer_base

# We need to declare that we want to use the args in this build step

ARG PHP_EXTS

ARG PHP_PECL_EXTS

# First, create the application directory, and some auxiliary directories for scripts and such

RUN mkdir -p /opt/apps/laravel-in-kubernetes /opt/apps/laravel-in-kubernetes/bin

# Next, set our working directory

WORKDIR /opt/apps/laravel-in-kubernetes

# We need to create a composer group and user, and create a home directory for it, so we keep the rest of our image safe,

# and don't accidentally run malicious scripts

RUN addgroup -S composer \

&& adduser -S composer -G composer \

&& chown -R composer /opt/apps/laravel-in-kubernetes \

&& apk add --virtual build-dependencies --no-cache ${PHPIZE_DEPS} openssl ca-certificates libxml2-dev oniguruma-dev \

&& docker-php-ext-install -j$(nproc) ${PHP_EXTS} \

&& pecl install ${PHP_PECL_EXTS} \

&& docker-php-ext-enable ${PHP_PECL_EXTS} \

&& apk del build-dependencies

# Next we want to switch over to the composer user before running installs.

# This is very important, so any extra scripts that composer wants to run,

# don't have access to the root filesystem.

# This is especially important when installing packages from unverified sources.

USER composer

# Copy in our dependency files.

# We want to leave the rest of the code base out for now,

# so Docker can build a cache of this layer,

# and only rebuild when the dependencies of our application change.

COPY --chown=composer composer.json composer.lock ./

# Install all the dependencies without running any installation scripts.

# We skip scripts as the code base hasn't been copied in yet and scripts will likely fail,

# as `php artisan` isn't available yet.

# This also helps us to cache previous runs and layers.

# As long as composer.json and composer.lock don't change, the install will be cached.

RUN composer install --no-dev --no-scripts --no-autoloader --prefer-dist

# Copy in our actual source code so we can run the installation scripts we need

# At this point all the PHP packages have been installed,

# and all that is left to do, is to run any installation scripts which depends on the code base

COPY --chown=composer . .

# Now that the code base and packages are all available,

# we can run the install again, and let it run any install scripts.

RUN composer install --no-dev --prefer-dist

Testing the Composer Stage

We can now build the Docker image and make sure it builds correctly, and installs all our dependencies

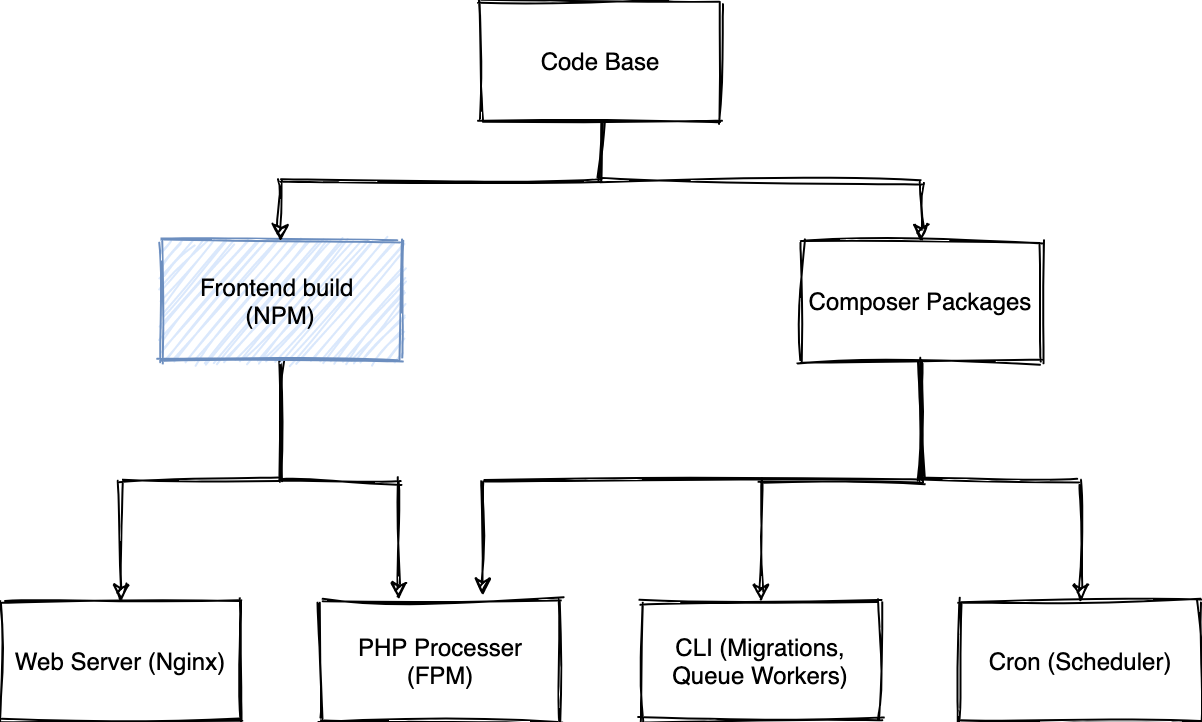

$ docker build . --target composer_base

Frontend Stage

We need to install the NPM packages as well, so we can run the Laravel Mix compilation.

Laravel Mix is an NPM package, so we also need a container which we can use to compile the frontend assets into the public directory.

Usually you would just run npm run prod, and we need to convert this into a Docker stage.

In the Dockerfile, we can add the next stage for NPM

# For the frontend, we want to get all the Laravel files,

# and run a production compile

FROM node:14 as frontend

# We need to copy in the Laravel files to make sure everything is available to our frontend compilation

COPY --from=composer_base /opt/apps/laravel-in-kubernetes /opt/apps/laravel-in-kubernetes

WORKDIR /opt/apps/laravel-in-kubernetes

# We want to install all the NPM packages,

# and compile the MIX bundle for production

RUN npm install && \

npm run prod

Testing the frontend stage

Let's build the frontend image to make sure it builds correctly, and doesn't fail along the way

$ docker build . --target frontend

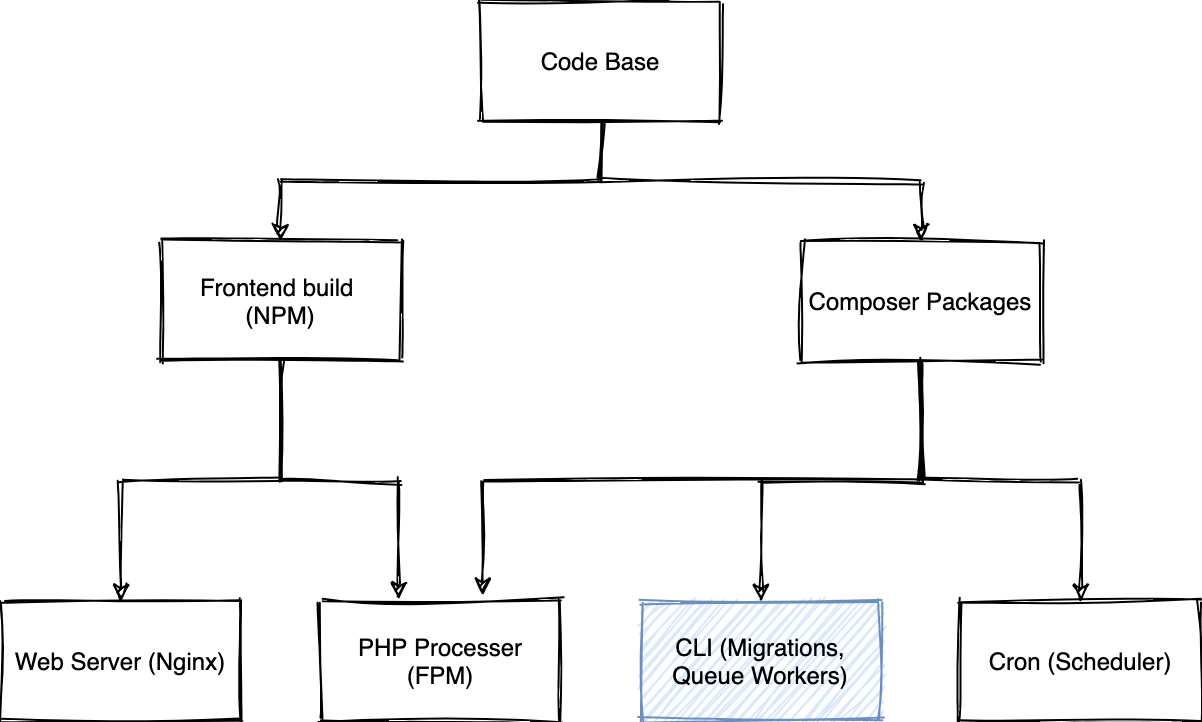

CLI Container

We are going to need a CLI container to run queue jobs, crons (the scheduler), migrations, and Artisan commands in Docker and Kubernetes.

In the Dockerfile add a new piece for CLI usage.

# For running things like migrations, and queue jobs,

# we need a CLI container.

# It contains all the Composer packages,

# and just the basic CLI "stuff" in order for us to run commands,

# be that queues, migrations, tinker etc.

FROM php:8.0-alpine as cli

# We need to declare that we want to use the args in this build step

ARG PHP_EXTS

ARG PHP_PECL_EXTS

WORKDIR /opt/apps/laravel-in-kubernetes

# We need to install some requirements into our image,

# used to compile our PHP extensions, as well as install all the extensions themselves.

# You can see a list of required extensions for Laravel here: https://laravel.com/docs/8.x/deployment#server-requirements

RUN apk add --virtual build-dependencies --no-cache ${PHPIZE_DEPS} openssl ca-certificates libxml2-dev oniguruma-dev && \

docker-php-ext-install -j$(nproc) ${PHP_EXTS} && \

pecl install ${PHP_PECL_EXTS} && \

docker-php-ext-enable ${PHP_PECL_EXTS} && \

apk del build-dependencies

# Next we have to copy in our code base from our initial build which we installed in the previous stage

COPY --from=composer_base /opt/apps/laravel-in-kubernetes /opt/apps/laravel-in-kubernetes

COPY --from=frontend /opt/apps/laravel-in-kubernetes/public /opt/apps/laravel-in-kubernetes/public

Testing the CLI image build

We can build this layer to make sure everything works correctly

$ docker build . --target cli

[...]

=> => writing image sha256:b6a7b602a4fed2d2b51316c1ad90fd12bb212e9a9c963382d776f7eaf2eebbd5

The CLI layer has successfully built, and we can move on to the next layer.
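Every stage in this post is tested the same way, so a small loop can save some typing. It builds several targets in order and stops at the first failure. This is a hypothetical helper. The laravel-in-kubernetes/<stage> tags simply mirror the image names used in the docker-compose.yml later in this post.

```shell
# Hypothetical helper: build each named stage in order, tagging it as
# laravel-in-kubernetes/<stage>, and stop at the first failing build.
build_stages() {
  for stage in "$@"; do
    echo "==> Building stage: $stage"
    docker build . --target "$stage" --tag "laravel-in-kubernetes/$stage" || return 1
  done
}
```

At this point build_stages composer_base frontend cli covers everything so far. Once the remaining stages exist, build_stages fpm_server web_server cron builds those too.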

FPM Container

We can now also build out the specific parts of the application, the first of which is the container which runs fpm for us.

In the same Dockerfile, we will create another stage to our docker build called fpm_server with the following contents

# We need a stage which contains FPM to actually run and process requests to our PHP application.

FROM php:8.0-fpm-alpine as fpm_server

# We need to declare that we want to use the args in this build step

ARG PHP_EXTS

ARG PHP_PECL_EXTS

WORKDIR /opt/apps/laravel-in-kubernetes

RUN apk add --virtual build-dependencies --no-cache ${PHPIZE_DEPS} openssl ca-certificates libxml2-dev oniguruma-dev && \

docker-php-ext-install -j$(nproc) ${PHP_EXTS} && \

pecl install ${PHP_PECL_EXTS} && \

docker-php-ext-enable ${PHP_PECL_EXTS} && \

apk del build-dependencies

# As FPM uses the www-data user when running our application,

# we need to make sure that we also use that user when starting up,

# so our user "owns" the application when running

USER www-data

# We have to copy in our code base from our initial build which we installed in the previous stage

COPY --from=composer_base --chown=www-data /opt/apps/laravel-in-kubernetes /opt/apps/laravel-in-kubernetes

COPY --from=frontend --chown=www-data /opt/apps/laravel-in-kubernetes/public /opt/apps/laravel-in-kubernetes/public

# We want to cache the event, routes, and views so we don't try to write them when we are in Kubernetes.

# Docker builds should be as immutable as possible, and this removes a lot of the writing of the live application.

RUN php artisan event:cache && \

php artisan route:cache && \

php artisan view:cache

Testing the FPM build

We want to build this stage to make sure everything works correctly.

$ docker build . --target fpm_server

[...]

=> => writing image sha256:ead93b67e57f0cdf4ec9c1ca197cf8ca1dacb0bb030f9f57dc0fccf5b3eb9904

Web Server container

We need to build a web server image which is used to serve static content, and to send any PHP requests to our FPM container.

This is quite important: we could serve static content through our PHP app, but Nginx is much better at it and serves static content a lot more efficiently.

The first thing we need is an Nginx configuration for our web server.

We'll also use an Nginx template, so we can inject the FPM URL into the configuration when the container starts up.

Create a directory called docker in the root of your project

$ mkdir -p docker

Inside that folder, create a file called nginx.conf.template with the following content:

server {

listen 80 default_server;

listen [::]:80 default_server;

# We need to set the root for our server,

# so any static file requests get loaded from the correct path

root /opt/apps/laravel-in-kubernetes/public;

index index.php index.html index.htm index.nginx-debian.html;

# _ makes sure that nginx does not try to map requests to a specific hostname

# This allows us to specify the urls to our application as infrastructure changes,

# without needing to change the application

server_name _;

# At the root location,

# we first check if there are any static files at the location, and serve those,

# If not, we check whether there is an indexable folder which can be served,

# Otherwise we forward the request to the PHP server

location / {

# Using try_files here is quite important as a security consideration

# to prevent injecting PHP code as static assets,

# and then executing them via a URL.

# See https://www.nginx.com/resources/wiki/start/topics/tutorials/config_pitfalls/#passing-uncontrolled-requests-to-php

try_files $uri $uri/ /index.php?$query_string;

}

# Some static assets are loaded on every page load,

# and logging these turns into a lot of useless logs.

# If you would prefer to see these requests for catching 404's etc.

# Feel free to remove them

location = /favicon.ico { access_log off; log_not_found off; }

location = /robots.txt { access_log off; log_not_found off; }

# When a 404 is returned, we want to display our application's 404 page,

# so we redirect it to index.php to load the correct page

error_page 404 /index.php;

# Whenever we receive a PHP url, or our root location block gets to serving through fpm,

# we want to pass the request to FPM for processing

location ~ \.php$ {

#NOTE: You should have "cgi.fix_pathinfo = 0;" in php.ini

include fastcgi_params;

fastcgi_intercept_errors on;

fastcgi_pass ${FPM_HOST};

fastcgi_param SCRIPT_FILENAME $document_root$fastcgi_script_name;

}

location ~ /\.ht {

deny all;

}

location ~ /\.(?!well-known).* {

deny all;

}

}

Once we have that completed, we can create the new Docker image stage which contains the Nginx layer:

# We need an nginx container which can pass requests to our FPM container,

# as well as serve any static content.

FROM nginx:1.20-alpine as web_server

WORKDIR /opt/apps/laravel-in-kubernetes

# We need to add our NGINX template to the container for startup,

# and configuration.

COPY docker/nginx.conf.template /etc/nginx/templates/default.conf.template

# Copy in ONLY the public directory of our project.

# This is where all the static assets will live, which nginx will serve for us.

COPY --from=frontend /opt/apps/laravel-in-kubernetes/public /opt/apps/laravel-in-kubernetes/public

Testing the Web Server build

We can now build up to this stage to make sure it builds successfully.

$ docker build . --target web_server

[...]

=> => writing image sha256:1ea6b28fcd99d173e1de6a5c0211c0ba770f6acef5a3231460739200a93feef2

Cron container

We also want to create a Cron layer, which we can use to run the Laravel scheduler.

We want to specify crond to run in the foreground as well, and make it the primary command when the container starts up.

# We need a CRON container to run the Laravel Scheduler.

# We'll start with the CLI container as our base,

# as we only need to override the CMD which the container starts with to point at cron

FROM cli as cron

WORKDIR /opt/apps/laravel-in-kubernetes

# We want to create a laravel.cron file with Laravel cron settings, which we can import into crontab,

# and run crond as the primary command in the foreground

RUN touch laravel.cron && \

echo "* * * * * cd /opt/apps/laravel-in-kubernetes && php artisan schedule:run" >> laravel.cron && \

crontab laravel.cron

CMD ["crond", "-l", "2", "-f"]

Testing the Cron build

We can build the container to make sure everything works correctly.

$ docker build . --target cron

=> => writing image sha256:b6fb826820e0669563a8746f83fb168fe39393ef6162d65c64439aa26b4d713b

The Complete Build

In our Dockerfile, we now have six stages (composer_base, frontend, cli, fpm_server, web_server, and cron), but we need a sensible default to build from.

When we run docker build without a --target, Docker builds the last stage in the Dockerfile. Making that our default stage gives us sensible and predictable results.

We can specify this right at the end of our Dockerfile, by specifying a last FROM statement with the default stage.

# [...]

FROM cli

Hardcoded values

You'll notice we've used variable interpolation in the nginx.conf.template file for the FPM host.

# [...]

fastcgi_pass ${FPM_HOST};

# [...]

The reason we've done this is to replace the FPM host at runtime, as it will change depending on where we are running.

For Docker Compose, it will be the name of the FPM service in the same Compose file, but for Kubernetes it will be the name of the Service created for the FPM container.

Since version 1.19, the official Nginx Docker images support configuration templates in which we can use environment variables.

It uses envsubst under the hood to replace any variables with ENV variables we pass in.

It does this when the container is started up.
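The image's entrypoint takes the template we copied to /etc/nginx/templates/default.conf.template and writes the rendered result to /etc/nginx/conf.d/default.conf. envsubst is only given the names of variables that are set in the container's environment, so Nginx's own variables such as $uri and $query_string are left alone. To preview the rendered file locally without starting a container, you can use the rough stand-in below. It substitutes only ${FPM_HOST} with sed instead of running the image's own envsubst script, and render_nginx_template is a hypothetical name.

```shell
# Hypothetical helper: approximate the nginx image's template step by
# replacing ${FPM_HOST} in a template file and printing the result.
# Only ${FPM_HOST} is touched, so nginx variables like $uri stay intact.
# Usage: FPM_HOST=laravel.fpm:9000 render_nginx_template docker/nginx.conf.template
render_nginx_template() {
  template="$1"
  if [ -z "${FPM_HOST:-}" ]; then
    echo "FPM_HOST is not set" >&2
    return 1
  fi
  # Escape characters that are special in a sed replacement (& \ and the | delimiter).
  escaped=$(printf '%s' "$FPM_HOST" | sed 's/[&|\\]/\\&/g')
  sed 's|\${FPM_HOST}|'"$escaped"'|g' "$template"
}
```

To see the file Nginx actually uses, read /etc/nginx/conf.d/default.conf inside the running web server container.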

Docker Compose

Next, we can test our Docker images locally by writing a docker-compose.yml file which runs each stage of our image together. This lets us use it for local development and reproduce the same setup when we get to Kubernetes.

First step is to create a docker-compose.yml file.

Laravel Sail already comes with one prefilled, but we are going to change it up a bit to have all our separate containers running, so we can validate what will run in Kubernetes early in our cycle.

If you are not using Laravel Sail, and don't have a docker-compose.yml file in the root of your project, you can skip the step where we move it to a backup file.

First, move the Sail docker-compose.yml file to a backup file called docker-compose.yml.backup.
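A guarded version of that move is sketched below. It only renames the file when one exists and never overwrites an existing backup. The function name is hypothetical, and it should be run from the project root.

```shell
# Hypothetical helper: move Sail's docker-compose.yml out of the way,
# without clobbering a backup made on an earlier run.
backup_sail_compose() {
  if [ ! -f docker-compose.yml ]; then
    echo "No docker-compose.yml found, nothing to back up"
    return 0
  fi
  if [ -e docker-compose.yml.backup ]; then
    echo "docker-compose.yml.backup already exists, leaving both files untouched" >&2
    return 1
  fi
  mv docker-compose.yml docker-compose.yml.backup
}
```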

Next, we want to create a base docker-compose.yml for our new image stages

version: '3'
services:
  # We need to run the FPM container for our application
  laravel.fpm:
    build:
      context: .
      target: fpm_server
    image: laravel-in-kubernetes/fpm_server
    # We can override any env values here.
    # Laravel also loads the .env file from the mounted codebase, but variables set here take precedence over it.
    environment:
      APP_DEBUG: "true"
    # Mount the codebase, so any code changes we make will be propagated to the running application
    volumes:
      - '.:/opt/apps/laravel-in-kubernetes'
    networks:
      - laravel-in-kubernetes

  # Run the web server container for static content, and proxying to our FPM container
  laravel.web:
    build:
      context: .
      target: web_server
    image: laravel-in-kubernetes/web_server
    # Expose our application port (80) through a port on our local machine (8080)
    ports:
      - '8080:80'
    environment:
      # We need to pass in the new FPM host as the name of the fpm container on port 9000
      FPM_HOST: "laravel.fpm:9000"
    # Mount the public directory into the container so we can serve any static files directly when they change
    volumes:
      - './public:/opt/apps/laravel-in-kubernetes/public'
    networks:
      - laravel-in-kubernetes

  # Run the Laravel Scheduler
  laravel.cron:
    build:
      context: .
      target: cron
    image: laravel-in-kubernetes/cron
    volumes:
      # Here we mount in our codebase so any changes are immediately reflected into the container
      - '.:/opt/apps/laravel-in-kubernetes'
    networks:
      - laravel-in-kubernetes

  # Run the frontend, and file watcher in a container, so any changes are immediately compiled and servable
  laravel.frontend:
    build:
      context: .
      target: frontend
    # Override the default CMD, so we can watch changes to frontend files, and re-transpile them.
    command: ["npm", "run", "watch"]
    image: laravel-in-kubernetes/frontend
    volumes:
      # Here we mount in our codebase so any changes are immediately reflected into the container
      - '.:/opt/apps/laravel-in-kubernetes'
      # Add node_modules as an anonymous volume.
      # This prevents our local node_modules from being propagated into the container,
      # so the node_modules can be compiled for each of the different architectures (local, image)
      - '/opt/apps/laravel-in-kubernetes/node_modules'
    networks:
      - laravel-in-kubernetes

networks:
  laravel-in-kubernetes:

If we run these containers, we should be able to access the home page from localhost:8080.

$ docker-compose up -d

If you now open http://localhost:8080, you should see your application running.

Our containers are now running properly. Nginx is passing our request onto FPM, and FPM is creating a response from our code base, and sending that back to our browser.
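Containers can take a moment to become ready after docker-compose up -d, so if you script this check, a retry loop avoids false failures. The sketch below uses curl. The function name, default URL, and attempt count are assumptions.

```shell
# Hypothetical helper: poll a URL until it answers with a status below 400,
# or give up after a number of attempts (one second apart).
# Usage: wait_for_app [url] [attempts]
wait_for_app() {
  url="${1:-http://localhost:8080}"
  attempts="${2:-30}"
  i=0
  while [ "$i" -lt "$attempts" ]; do
    # --fail makes curl exit non-zero on HTTP errors (status 400 and above).
    if curl --silent --fail --output /dev/null "$url"; then
      echo "Application is up at $url"
      return 0
    fi
    i=$((i + 1))
    sleep 1
  done
  echo "Application did not respond at $url after $attempts attempts" >&2
  return 1
}
```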

Our crons are also running correctly in the cron container. You can see this by checking the logs for the cron container.

$ docker-compose logs laravel.cron

Attaching to laravel-in-kubernetes_laravel.cron_1

laravel.cron_1 | No scheduled commands are ready to run.

Running MySQL in docker-compose.yml

We need to run MySQL in Docker as well for local development.

Sail ships with this by default, and if you check the docker-compose.yml.backup file, you will notice a mysql service, which we can copy over as is and add to our docker-compose.yml.

Docker Compose automatically loads the .env file from our project and uses it to fill in the variables referenced in the docker-compose.yml.backup which Sail ships with:

services:
  # [...]
  mysql:
    image: 'mysql:8.0'
    ports:
      - '${FORWARD_DB_PORT:-3306}:3306'
    environment:
      MYSQL_ROOT_PASSWORD: '${DB_PASSWORD}'
      MYSQL_DATABASE: '${DB_DATABASE}'
      MYSQL_USER: '${DB_USERNAME}'
      MYSQL_PASSWORD: '${DB_PASSWORD}'
      MYSQL_ALLOW_EMPTY_PASSWORD: 'yes'
    volumes:
      - 'laravel-in-kubernetes-mysql:/var/lib/mysql'
    networks:
      - laravel-in-kubernetes
    healthcheck:
      test: ["CMD", "mysqladmin", "ping", "-p${DB_PASSWORD}"]
      retries: 3
      timeout: 5s

# At the end of the file
volumes:
  laravel-in-kubernetes-mysql:

We can now run docker-compose up again, and MySQL should be running alongside our other services.

$ docker-compose up -d

Running migrations in docker-compose

To test our MySQL service, and that our application can actually connect to it, we can run migrations in the FPM container, as it has all of the right dependencies. This assumes DB_HOST in your .env is set to mysql, the name of the service.

$ docker-compose exec laravel.fpm php artisan migrate

Migration table created successfully.

Migrating: 2014_10_12_000000_create_users_table

Migrated: 2014_10_12_000000_create_users_table (35.78ms)

Migrating: 2014_10_12_100000_create_password_resets_table

Migrated: 2014_10_12_100000_create_password_resets_table (25.64ms)

Migrating: 2019_08_19_000000_create_failed_jobs_table

Migrated: 2019_08_19_000000_create_failed_jobs_table (30.73ms)

This means our application can connect to the database, and our migrations have been run.

With the volume we attached, we should be able to restart all of the containers, and our data will stay persisted.
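In a script, running migrations straight after docker-compose up -d can fail while MySQL is still initialising. The mysql service defines a healthcheck, so the sketch below waits for Docker to report it healthy first. It relies on docker-compose ps -q and the .State.Health.Status field from docker inspect. The function name, retry count, and COMPOSE variable are assumptions.

```shell
# Command used to talk to Compose; set COMPOSE="docker compose" for Compose v2.
COMPOSE="${COMPOSE:-docker-compose}"

# Hypothetical helper: wait for the mysql service's healthcheck to pass,
# then run the migrations in the FPM container.
# Usage: migrate_when_ready [attempts]
migrate_when_ready() {
  attempts="${1:-30}"
  # $COMPOSE is left unquoted on purpose so "docker compose" splits into two words.
  container=$($COMPOSE ps -q mysql)
  if [ -z "$container" ]; then
    echo "The mysql service is not running" >&2
    return 1
  fi

  i=0
  while [ "$i" -lt "$attempts" ]; do
    status=$(docker inspect --format '{{.State.Health.Status}}' "$container")
    if [ "$status" = "healthy" ]; then
      # -T disables TTY allocation so this also works from scripts and CI.
      $COMPOSE exec -T laravel.fpm php artisan migrate
      return $?
    fi
    i=$((i + 1))
    sleep 2
  done

  echo "MySQL did not become healthy in time" >&2
  return 1
}
```

The healthcheck above doesn't set an interval, so Docker uses its 30-second default. The first healthy status can therefore take around 30 seconds to appear after the container starts.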

On to Kubernetes

Now that we have docker-compose running locally, we can move forward onto building our images and pushing them to a registry.